Jimmy Lin, Chief Scientist and Professor, University of Waterloo

A little while ago, we announced first-class support in Yupp for rendering SVG responses in our side-by-side model comparisons. Today, we’re pleased to announce a leaderboard that ranks frontier models specifically on their ability to generate coherent and visually appealing SVGs, based on organic user preferences. This gives a direct evaluation of the models’ reasoning and coding capabilities. We’re also sharing a small open dataset of around 3.5K public SVG prompts, model responses, and user preferences for model builders and researchers.

Yupp is a fun and easy way to try out and compare the latest AI models (800 and counting) – all for free! Instead of just one model responding to your prompts, we show two or more responses side by side and let you compare. You tell us which response you prefer and why, and this signal provides valuable feedback to help model builders improve their models. Now you can prompt AI models to generate SVGs!

What’s SVG?

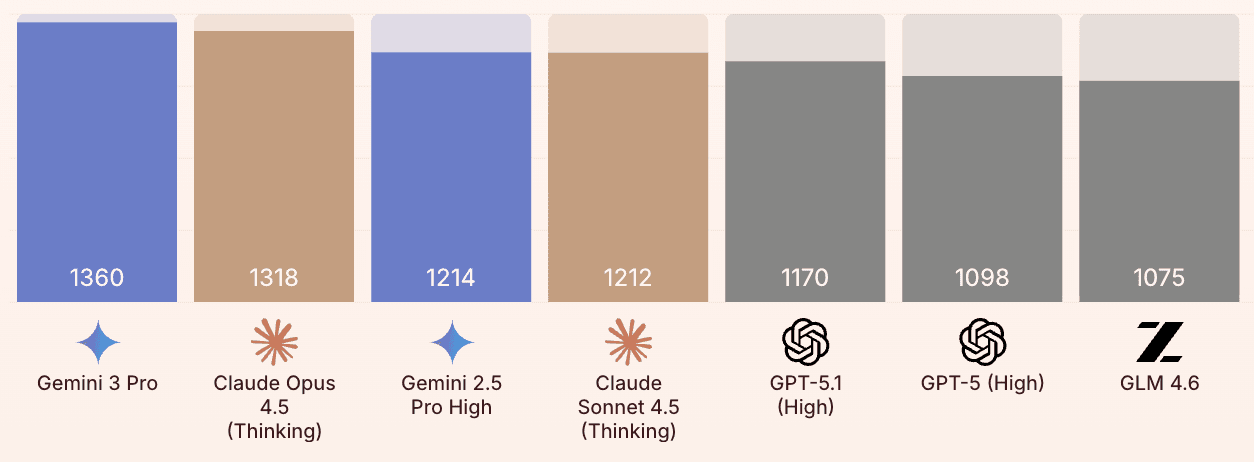

What are these, you ask? It’s best explained with an example. Here are renditions of a cute polar bear by Gemini 3 Pro on the left and Claude Opus 4.5 (Thinking) on the right:

SVG (Scalable Vector Graphics) is an XML-based format for describing two-dimensional graphics that can scale to any size without losing quality. Unlike raster images such as JPEG or PNG, which store pixels, SVG encodes shapes – lines, curves, polygons, text, and more – as geometric instructions. This makes SVG ideal for sharp icons, diagrams, charts, and illustrations on the web. Because the format is text-based, SVG files are lightweight, easy to edit or generate programmatically, and can be styled with CSS or manipulated with JavaScript. SVGs also support interactivity and animation, making them a powerful and flexible choice for modern web graphics.
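The Python sketch below makes the “shapes, not pixels” idea concrete. It uses only the standard library and builds a tiny polar bear face out of circles and an ellipse. The function name, coordinates, and colors are our own illustrative choices and are not taken from any model’s output. The `viewBox` fixes an internal 200×200 coordinate system, so the same markup renders crisply at 64 or 1024 pixels wide.

```python
import xml.etree.ElementTree as ET


def polar_bear_face_svg(size: int = 200) -> str:
    """Return a tiny SVG polar bear face built from geometric primitives."""
    svg = ET.Element("svg", {
        "xmlns": "http://www.w3.org/2000/svg",
        # viewBox defines an internal 200x200 coordinate system; width/height
        # only set the display size, so the drawing scales without pixelation.
        "viewBox": "0 0 200 200",
        "width": str(size),
        "height": str(size),
    })
    # Ears first: SVG paints in document order, so the head drawn afterwards
    # overlaps them and only the outer part of each ear shows.
    for cx in (60, 140):
        ET.SubElement(svg, "circle", {"cx": str(cx), "cy": "55", "r": "22",
                                      "fill": "#f4f4f4", "stroke": "#999"})
    # Head.
    ET.SubElement(svg, "circle", {"cx": "100", "cy": "110", "r": "65",
                                  "fill": "#ffffff", "stroke": "#999"})
    # Eyes.
    for cx in (78, 122):
        ET.SubElement(svg, "circle", {"cx": str(cx), "cy": "100", "r": "6",
                                      "fill": "#222"})
    # Nose.
    ET.SubElement(svg, "ellipse", {"cx": "100", "cy": "128", "rx": "12", "ry": "8",
                                   "fill": "#222"})
    return ET.tostring(svg, encoding="unicode")


if __name__ == "__main__":
    # Same shapes at two display sizes: only the width/height attributes differ.
    print(polar_bear_face_svg(64))
    print(polar_bear_face_svg(1024))
```

The whole drawing is a few hundred bytes of text, and every part of it can be read, edited, or generated by a program.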

Here’s another example, where Claude Opus 4.1 and GPT-5 Codex (Medium) offer their interpretations of a mandala, complete with animations. The final result from GPT-5 Codex (Medium) is mesmerizing:

There’s no doubt that getting AIs to generate SVGs is fun. But we also think it’s interesting from two main perspectives, which we discuss below.

SVG is Code Generation

First, SVG is code! That is, getting AIs to generate SVGs is, in fact, getting the AIs to generate code. It’s not “painting pixels” – it’s programming a geometric scene. Thus, SVG generation exercises the same capabilities needed for writing Python or JavaScript, but with more spatial constraints. The code needs to be both syntactically correct (e.g., issue valid commands, ensure tags are properly balanced, etc.) and semantically correct (i.e., responsive to the user prompt in drawing a coherent scene).
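The Python sketch below illustrates the syntactic half of this requirement. The helper name `check_svg_syntax` is our own, and the check is deliberately rough: it confirms that a response is well-formed XML with an `<svg>` root. That is enough to catch unbalanced or mismatched tags. It is not a full SVG validator, though. Malformed path data, or a drawing that ignores the prompt, would still pass. That is the semantic side, where human judgment comes in.

```python
import xml.etree.ElementTree as ET

SVG_ROOT_TAGS = {"{http://www.w3.org/2000/svg}svg", "svg"}


def check_svg_syntax(text: str) -> tuple[bool, str]:
    """Rough syntactic check: well-formed XML whose root element is <svg>.

    Catches unbalanced or mismatched tags and malformed attributes. It does
    NOT validate path data, attribute values, or the SVG schema, and says
    nothing about whether the drawing matches the prompt.
    """
    try:
        root = ET.fromstring(text)
    except ET.ParseError as err:
        return False, f"not well-formed XML: {err}"
    if root.tag not in SVG_ROOT_TAGS:
        return False, f"root element is {root.tag!r}, expected <svg>"
    return True, "ok"


# Illustrative inputs.
GOOD_SVG = ('<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 10 10">'
            '<circle cx="5" cy="5" r="4"/></svg>')
UNBALANCED_SVG = ('<svg xmlns="http://www.w3.org/2000/svg">'
                  '<g><circle cx="5" cy="5" r="4"/></svg>')  # <g> is never closed

if __name__ == "__main__":
    print(check_svg_syntax(GOOD_SVG))        # (True, 'ok')
    print(check_svg_syntax(UNBALANCED_SVG))  # False, with a "mismatched tag" parse error
```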

For example, here’s the model-generated snippet of code for drawing the cute polar bear above:

When users prompt AIs to generate SVGs, they are in effect assessing each AI’s software engineering ability: a preference for one model’s output can be viewed as an endorsement of that model’s coding ability over the other’s. Another way to think about this is that SVG generation brings mass consumer appeal to coding. Furthermore, everyday users, even non-programmers, have little difficulty assessing the quality of SVGs, which expands the pool of people who can help improve frontier models.

In short, SVG generation capabilities in Yupp provide a bridge between everyday users and one important use case of AI: software engineering.

SVG Generation Probes Model Capabilities

Second, SVGs probe internal representations of AI models. When an LLM can generate coherent, semantically meaningful SVGs, it subtly reveals aspects of its “understanding” of the world and objects within it. Specifically, a lot of “knowledge” needs to come together:

Models need internal representations of objects that capture hierarchical and spatial relationships. They need to have learned that faces typically have two eyes, a nose, and a mouth. They also need to know that rabbits have long ears and bears have short ones, but that in both cases the ears sit on top of the head. Looking at the example above, both Gemini 3 Pro and Claude Opus 4.5 (Thinking) demonstrate this capability, at least with respect to polar bears – if you look at the code, it’s even annotated with appropriate comments: “these are the ears”, “this is the body”, etc.

Models need basic spatial reasoning to draw fireworks above a city skyline and trains at ground level. The same capabilities are needed to arrange items neatly on a table or desks in rows in a classroom. For the two models in our running example, check out the exchange about making the bear wiggle its right ear. Both models are smart enough to figure out that left and right are reversed between the viewer’s perspective and the bear’s.

Models need to capture simple physics as a prerequisite for animations that make sense: knowing that snow falls downward due to gravity, that wheels spin around their axles, etc. Check out the gently falling snow in Gemini 3 Pro’s artistic creation in our running example.

Finally, models need to express a sense of aesthetics, which parallels style and tone in text generation. Different models make different choices with respect to the use of gradients, selection of colors, choice of line styles, etc. Beyond coherence and “correctness” at the syntactic and semantic levels, we’d want SVGs to be visually appealing as well!
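The physics requirement has a concrete wrinkle in SVG: the y axis points down, so “falling” means an increasing `cy`. The Python sketch below generates snowflakes animated with SVG’s `<animate>` element. It uses only the standard library. The function name and all numeric choices (sizes, durations, colors) are illustrative assumptions, not how any particular model built its scene. Each flake also gets a negative `begin` offset, which starts its animation partway through the fall. This staggers the flakes instead of dropping them all in a single sheet.

```python
import random
import xml.etree.ElementTree as ET


def snowfall_svg(n_flakes: int = 30, width: int = 200, height: int = 200,
                 seed: int = 0) -> str:
    """Return an SVG of snowflakes falling from top to bottom, looping forever."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    svg = ET.Element("svg", {"xmlns": "http://www.w3.org/2000/svg",
                             "viewBox": f"0 0 {width} {height}"})
    ET.SubElement(svg, "rect", {"width": str(width), "height": str(height),
                                "fill": "#1b2a41"})  # night-sky background
    for _ in range(n_flakes):
        r = rng.uniform(1.0, 3.0)     # flake radius
        x = rng.uniform(0, width)     # horizontal position, fixed while falling
        dur = rng.uniform(4.0, 10.0)  # seconds per fall: slow, "gentle" snow
        start, end = -r, height + r   # just above the top edge -> just below the bottom
        flake = ET.SubElement(svg, "circle", {"cx": f"{x:.1f}", "cy": f"{start:.1f}",
                                              "r": f"{r:.1f}", "fill": "white"})
        # SVG's y axis points DOWN, so falling under gravity means cy increases.
        ET.SubElement(flake, "animate", {
            "attributeName": "cy",
            "from": f"{start:.1f}",
            "to": f"{end:.1f}",
            "dur": f"{dur:.1f}s",
            # A negative begin starts the animation partway through its cycle,
            # so flakes are staggered instead of all starting at the top.
            "begin": f"-{rng.uniform(0.0, dur):.1f}s",
            "repeatCount": "indefinite",
        })
    return ET.tostring(svg, encoding="unicode")


if __name__ == "__main__":
    print(snowfall_svg(n_flakes=5))
```

Getting the sign of the motion right is exactly the kind of detail that separates a scene that “makes sense” from one where the snow drifts upward.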

The final output from Gemini 3 Pro has the bear wiggling its right ear, winking its left eye (from its own perspective), all behind a background of falling snow:

The neat thing about using SVGs as probes into models’ internal knowledge and representations is that the model’s output is expressed symbolically, as code rather than pixels. If we examine the code, we’ll see that models frequently annotate the parts of the object with code comments (see above), so there seems to be an explicit mapping from models’ internal states to their outputs. We can further manipulate generated objects symbolically to probe “understanding” – for example, by asking the model to make the bear’s right ear wiggle, as above.
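Symbolic manipulation of this kind is easy to sketch in code. The Python example below relies on two assumptions. First, it assumes a hypothetical input convention: ears are drawn as `<circle>` elements with `class="ear"`. Real model outputs more often label parts with comments, which `ElementTree` drops when parsing. Second, it assumes the bear faces the viewer. Under those assumptions, the bear’s right ear is the one on the viewer’s left, i.e. the ear with the smaller x coordinate. This is the same perspective flip discussed above. The sketch then attaches an `<animateTransform>` that rotates that ear back and forth about its base. The function name and the `SAMPLE_BEAR` input are illustrative.

```python
import xml.etree.ElementTree as ET

NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", NS)  # serialize as <svg ...> rather than <ns0:svg ...>


def wiggle_bears_right_ear(svg_text: str, degrees: float = 15.0) -> str:
    """Add a wiggle animation to the bear's RIGHT ear (from the bear's perspective).

    Hypothetical input convention: ears are <circle class="ear"> elements and
    the bear faces the viewer.
    """
    root = ET.fromstring(svg_text)
    ears = [c for c in root.iter(f"{{{NS}}}circle") if c.get("class") == "ear"]
    if len(ears) != 2:
        raise ValueError(f"expected 2 ears, found {len(ears)}")
    # Facing the viewer, the bear's right ear appears on the viewer's LEFT,
    # i.e. it is the ear with the smaller x coordinate.
    ear = min(ears, key=lambda c: float(c.get("cx")))
    # Pivot at the bottom of the ear circle (roughly where it meets the head);
    # rotating a circle about its own centre would produce no visible motion.
    px = float(ear.get("cx"))
    py = float(ear.get("cy")) + float(ear.get("r"))
    pivot = f"{px:g} {py:g}"
    ET.SubElement(ear, f"{{{NS}}}animateTransform", {
        "attributeName": "transform",
        "type": "rotate",
        # Each value is "angle cx cy": rest, tilt outward (negative angles
        # rotate counterclockwise on screen), back to rest.
        "values": f"0 {pivot}; {-degrees:g} {pivot}; 0 {pivot}",
        "dur": "0.6s",
        "repeatCount": "indefinite",
    })
    return ET.tostring(root, encoding="unicode")


# Illustrative input: two ears plus a head.
SAMPLE_BEAR = ('<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 200 200">'
               '<circle class="ear" cx="140" cy="60" r="22"/>'
               '<circle class="ear" cx="60" cy="60" r="22"/>'
               '<circle cx="100" cy="110" r="65"/></svg>')

if __name__ == "__main__":
    print(wiggle_bears_right_ear(SAMPLE_BEAR))
```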

Now, of course, the counter-argument is that modern frontier models have ingested so much content, including SVGs found on the web, that they may simply be regurgitating memorized SVGs. While we can’t rule out that possibility, it doesn’t detract from the use of SVG generation (and follow-up edits) to probe model capabilities. We suspect that Simon Willison would agree, given his somewhat tongue-in-cheek benchmark of asking every model to generate an SVG of a pelican riding a bicycle.

Our thinking appears to be supported by recent analyses shared by Anthropic. Using sparse crosscoder features, they were able to pinpoint mechanisms that LLMs develop to “perceive” properties of text, such as line-breaking constraints. Surprisingly, the same methods can also identify higher-level semantic concepts in SVGs, such as eyes and ears.

To summarize: SVG generation probes models’ internal knowledge and representations. Generating coherent SVGs requires “understanding” hierarchical and spatial relationships, as well as simple physics… so, SVG generation requires, dare we say… a good “world model”?

A Leaderboard for SVG Generation Capability

We’ve attempted to articulate some of the basic capabilities a model needs to generate coherent and visually appealing SVGs. Of course, different models possess these capabilities to different extents, and that’s why we have a leaderboard!

The Yupp Leaderboard aggregates the preferences of users around the world who interact naturally with AIs for their everyday use cases. Our VIBE Score, a form of Elo score computed using the popular Bradley-Terry model, captures the preferences of our globally distributed users. Since launch, we have maintained leaderboards for text, image, and live models, and today we are adding a leaderboard specifically for SVG generation. Because models often take a long time to generate SVGs, this leaderboard takes latency into account: it downweights preferences when the latency difference between model responses exceeds a certain threshold.
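For readers unfamiliar with Bradley-Terry, here is a minimal Python sketch of latency-aware fitting. It is not Yupp’s VIBE Score implementation. Several choices in it are hypothetical: the 10-second threshold, the 0.25 downweight, the small “virtual tie” smoothing, and the 1000-point anchor. It also ignores ties and multi-way comparisons. Each model i gets a strength p_i, and P(i beats j) = p_i / (p_i + p_j). Strengths are fitted with the standard minorize-maximize (MM) update on weighted win counts. They are then mapped to an Elo-like scale via R_i = 1000 + 400 log10 p_i. This mapping reproduces the familiar Elo win probability 1 / (1 + 10^((R_j − R_i)/400)).

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Vote:
    winner: str
    loser: str
    latency_gap_s: float  # |latency(winner) - latency(loser)|, in seconds


# Hypothetical parameters, chosen only for illustration.
LATENCY_THRESHOLD_S = 10.0
SLOW_VOTE_WEIGHT = 0.25
VIRTUAL_TIE = 0.1


def vote_weight(v: Vote) -> float:
    """Full weight unless the latency gap between the responses exceeds the threshold."""
    return SLOW_VOTE_WEIGHT if v.latency_gap_s > LATENCY_THRESHOLD_S else 1.0


def bradley_terry_ratings(votes: list[Vote], iters: int = 500) -> dict[str, float]:
    wins: dict[str, float] = defaultdict(float)                # weighted wins per model
    games: dict[tuple[str, str], float] = defaultdict(float)   # weighted games per pair
    for v in votes:
        w = vote_weight(v)
        wins[v.winner] += w
        games[tuple(sorted((v.winner, v.loser)))] += w
    # Smoothing (an assumption): a small virtual tie on every observed pair credits
    # VIRTUAL_TIE wins to each side, keeping every strength positive.
    for i, j in list(games):
        wins[i] += VIRTUAL_TIE
        wins[j] += VIRTUAL_TIE
        games[(i, j)] += 2 * VIRTUAL_TIE
    opponents: dict[str, list[tuple[str, float]]] = defaultdict(list)
    for (i, j), n in games.items():
        opponents[i].append((j, n))
        opponents[j].append((i, n))
    p = {m: 1.0 for m in opponents}
    for _ in range(iters):
        # MM update: p_i <- W_i / sum_j n_ij / (p_i + p_j)
        new = {i: wins[i] / sum(n / (p[i] + p[j]) for j, n in opponents[i]) for i in p}
        # Strengths are identified only up to scale: fix their geometric mean at 1.
        scale = math.exp(sum(math.log(s) for s in new.values()) / len(new))
        p = {m: s / scale for m, s in new.items()}
    # Elo-like scale: P(i beats j) = 1 / (1 + 10 ** ((R_j - R_i) / 400)).
    return {m: 1000 + 400 * math.log10(s) for m, s in p.items()}


# Illustrative votes: A usually beats B, B usually beats C, A always beats C.
DEMO_VOTES = ([Vote("A", "B", 1.0)] * 3 + [Vote("B", "A", 1.0)]
              + [Vote("B", "C", 1.0)] * 3 + [Vote("C", "B", 1.0)]
              + [Vote("A", "C", 1.0)] * 2)

if __name__ == "__main__":
    print(bradley_terry_ratings(DEMO_VOTES))
```

Downweighting slow-versus-fast votes, rather than discarding them, keeps them in the data while limiting how much a large latency gap can sway the ranking.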

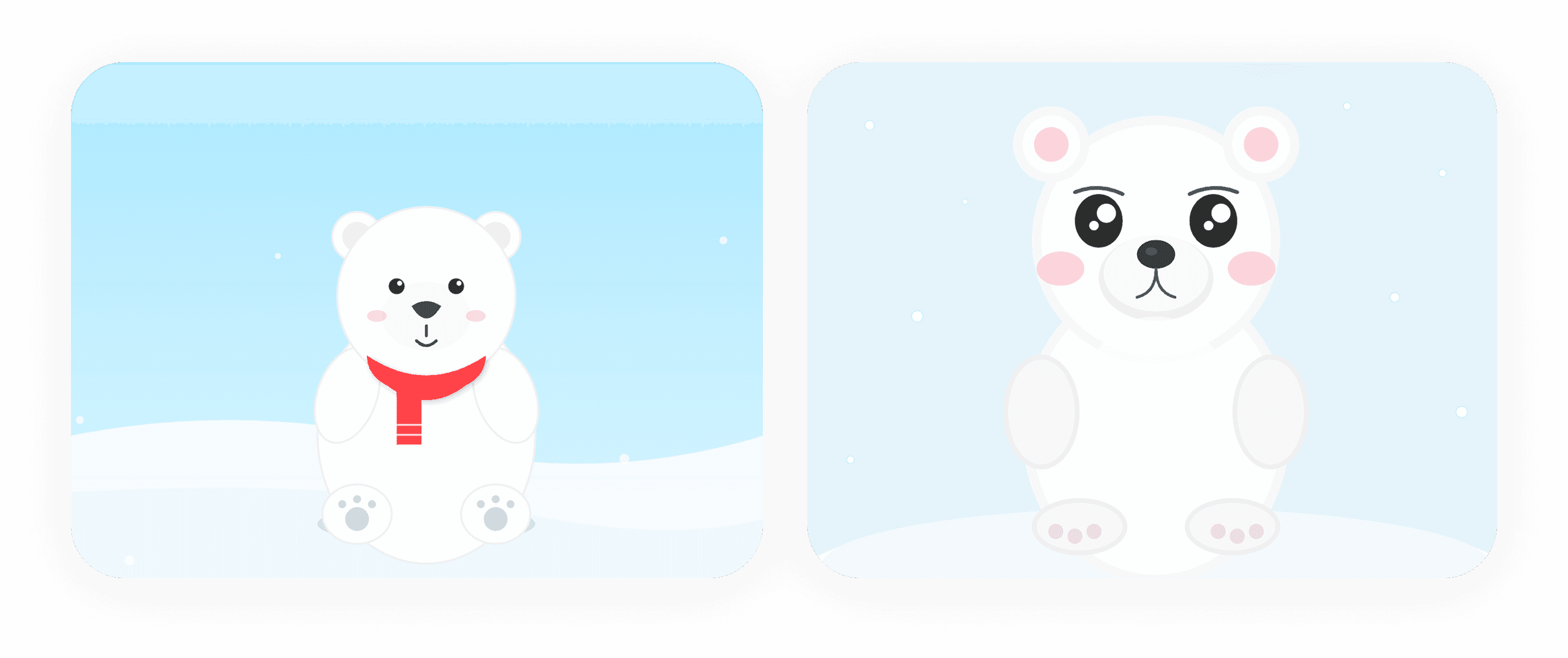

Here’s the current state of the Yupp SVG AI Leaderboard:

We’re still early, of course, and have accumulated only a relatively small number of votes. Still, Gemini 3 Pro sits at the top of the rankings, with Claude Opus 4.5 (Thinking) close behind. That’s not surprising, since both are great all-around models that excel at a variety of tasks, including coding. Among open-weight models, GLM 4.6 performs well, although it still lags behind the proprietary models.

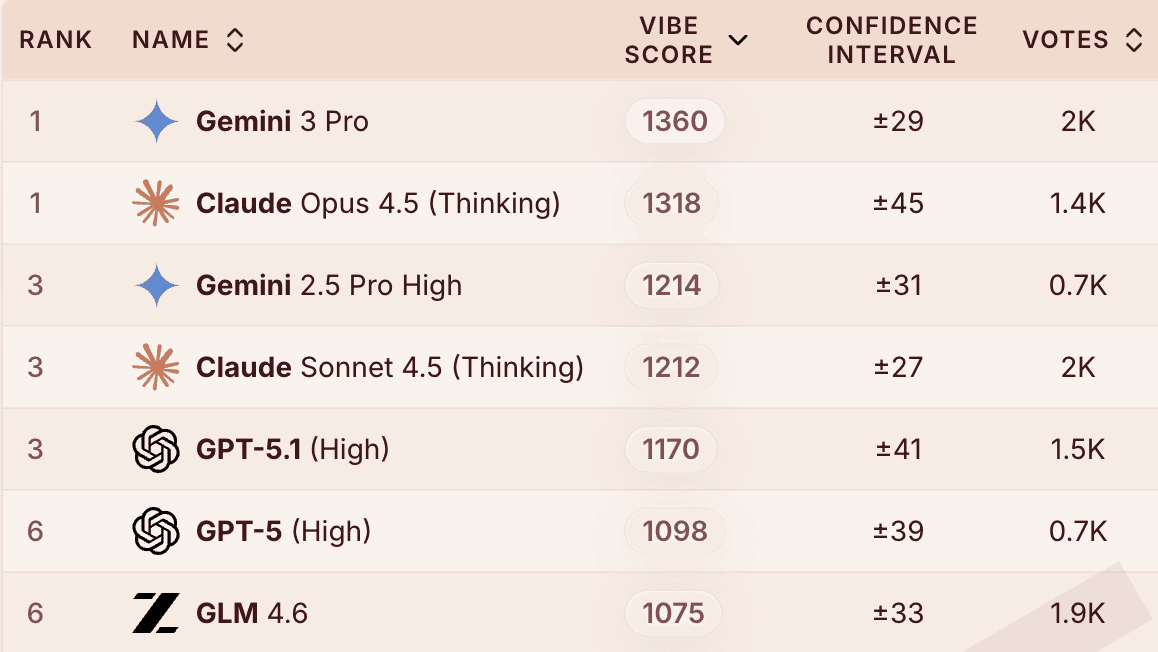

Although frontier models produce some startlingly realistic drawings, the results show that they still have a way to go. For example, consider these two steam locomotives, by Gemini 3 Pro and GPT-5.1 (High):

It’s amazing that both models have captured the gist of a complex piece of machinery, but the physics is clearly off: one steam locomotive is levitating above the tracks, while the other appears to be chugging along on the ground.

As another example, we probably wouldn’t want to drive on this roundabout:

Even the best models have much room for improvement, and we believe we can help!

Sharing an SVG Dataset

As one step toward helping model builders and researchers improve the SVG generation (and coding) capabilities of their models, we are sharing a small open dataset with the world. It contains around 3.5K public SVG prompts across 2.8K chats, comprising both single-turn and multi-turn interactions. For each turn, we share model responses and user preferences. A distinctive feature of Yupp is that users tell us not only which SVG they prefer, but also why. These reasons can take the form of free text and what we call traits (for example, telling model builders whether an SVG is “well-structured” or “broken”).
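The hypothetical Python data model below illustrates how chats, turns, responses, and preferences nest. It also includes a small helper that tallies traits on preferred versus non-preferred responses. The class names, field names, and sample record are our own assumptions. The released dataset’s actual schema may differ, so check the released files before relying on any of these names.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Response:
    model: str
    svg: str                  # the SVG markup the model returned
    preferred: bool           # did the user pick this response?
    traits: list[str] = field(default_factory=list)  # e.g. "well-structured", "broken"
    reason: str = ""          # optional free-text explanation


@dataclass
class Turn:
    prompt: str
    responses: list[Response]  # shown side by side


@dataclass
class Chat:
    chat_id: str
    turns: list[Turn]  # one turn = single-turn; several = multi-turn (e.g. follow-up edits)


def trait_counts(chats: list[Chat], preferred: bool) -> Counter:
    """Count traits attached to preferred (or non-preferred) responses."""
    counts: Counter = Counter()
    for chat in chats:
        for turn in chat.turns:
            for resp in turn.responses:
                if resp.preferred == preferred:
                    counts.update(resp.traits)
    return counts


# A made-up single-turn chat, for illustration only.
EXAMPLE = [
    Chat("c1", [Turn("Draw a cute polar bear", [
        Response("model-a", "<svg>...</svg>", True, ["well-structured"], "cleaner shapes"),
        Response("model-b", "<svg><g>", False, ["broken"]),
    ])]),
]

if __name__ == "__main__":
    print(trait_counts(EXAMPLE, preferred=True))   # Counter({'well-structured': 1})
    print(trait_counts(EXAMPLE, preferred=False))  # Counter({'broken': 1})
```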

Hopefully, we’ve convinced you that SVG generation is not only fun, but also useful! We are just getting started, and we wish to engage the community in collaborations that will empower humanity to shape the future of AI. If you’re interested in working on these problems with us, drop us a note at research@yupp.ai and let’s talk!